The Black Box Problem of AI: Why Explainability is Important in AI systems

- Savneet Singh

- Application, Explainability

- 15 Jan, 2024

The growth of artificial intelligence (AI) has brought remarkable opportunities, but it has also raised an important question: can we trust these complex systems if we don’t understand how they work? This is where the explainability and interpretability of AI systems come in.

Consider a magic box that takes in information and generates answers, but gives you no idea how it reached those conclusions. This is precisely the AI black box problem. A black box is something we can’t see inside, so the way it converts input into output remains unexplained.

Here is an example: an AI-powered diagnostic tool could find patterns in medical records such as blood reports, family history, and MRIs, and predict the likelihood of a heart attack. But without transparency into how it reaches that prediction, it is difficult to verify the tool’s accuracy or the reasoning behind its diagnoses.

Why is explainability in AI important?

Explainability refers to the degree to which a layperson can understand, in simple terms, the internal mechanics of a machine learning or deep learning algorithm. Here’s how Think20(1) explains it:

“Explainability” is defined in various ways but is at its core about the translation of technical concepts and decision outputs into intelligible, comprehensible formats suitable for evaluation.

Interpretability, on the other hand, is the ability to predict how an AI system’s output will change if any of its parameters are changed.
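To make the idea of interpretability concrete, here is a minimal sketch using a toy linear risk score. The feature names, weights, and patient values are hypothetical and chosen only for illustration; they do not come from any real diagnostic model. Because the model is a plain weighted sum, we can say in advance how much the score will move when one input changes.

```python
# Hypothetical weights for a toy risk score; not taken from any real model.
WEIGHTS = {"age": 0.5, "cholesterol": 0.25, "exercise_hours": -2.0}
BIAS = 10.0


def risk_score(features):
    """Weighted sum of the inputs plus a constant: a fully transparent model."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def predicted_change(feature, delta):
    """In a linear model, changing one input by delta shifts the score by weight * delta."""
    return WEIGHTS[feature] * delta


# Invented patient record, used only to exercise the functions above.
patient = {"age": 50, "cholesterol": 200, "exercise_hours": 3}

before = risk_score(patient)
after = risk_score({**patient, "exercise_hours": 5})

# The observed shift matches what we could predict without running the model.
print(before, after, predicted_change("exercise_hours", 2))
```

With a black box model, no such simple rule is available, which is why the effect of a changed input has to be probed or approximated rather than read off directly.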

Transparent AI is explainable AI. This understanding is vital for building trust, mitigating risks, and ensuring AI aligns with our values. Here is why this is important:

- Developing trust: AI systems frequently rely on complicated algorithms and data structures to make choices. Without understanding these processes, it is difficult to trust their accuracy, especially when dealing with sensitive issues such as using facial recognition for criminal justice or AI-led loan approval systems.

- Ensuring Accountability: Explainability makes developers and organizations responsible for their products. If an AI system makes a biased choice, we can trace the cause of the bias (for example, the model may have been trained on biased or insufficient data).

- Learning and Improvement: Understanding an AI system’s internal operations allows us to discover errors and room for improvement. This continual learning cycle is critical for ensuring AI is reliable, current, and relevant.

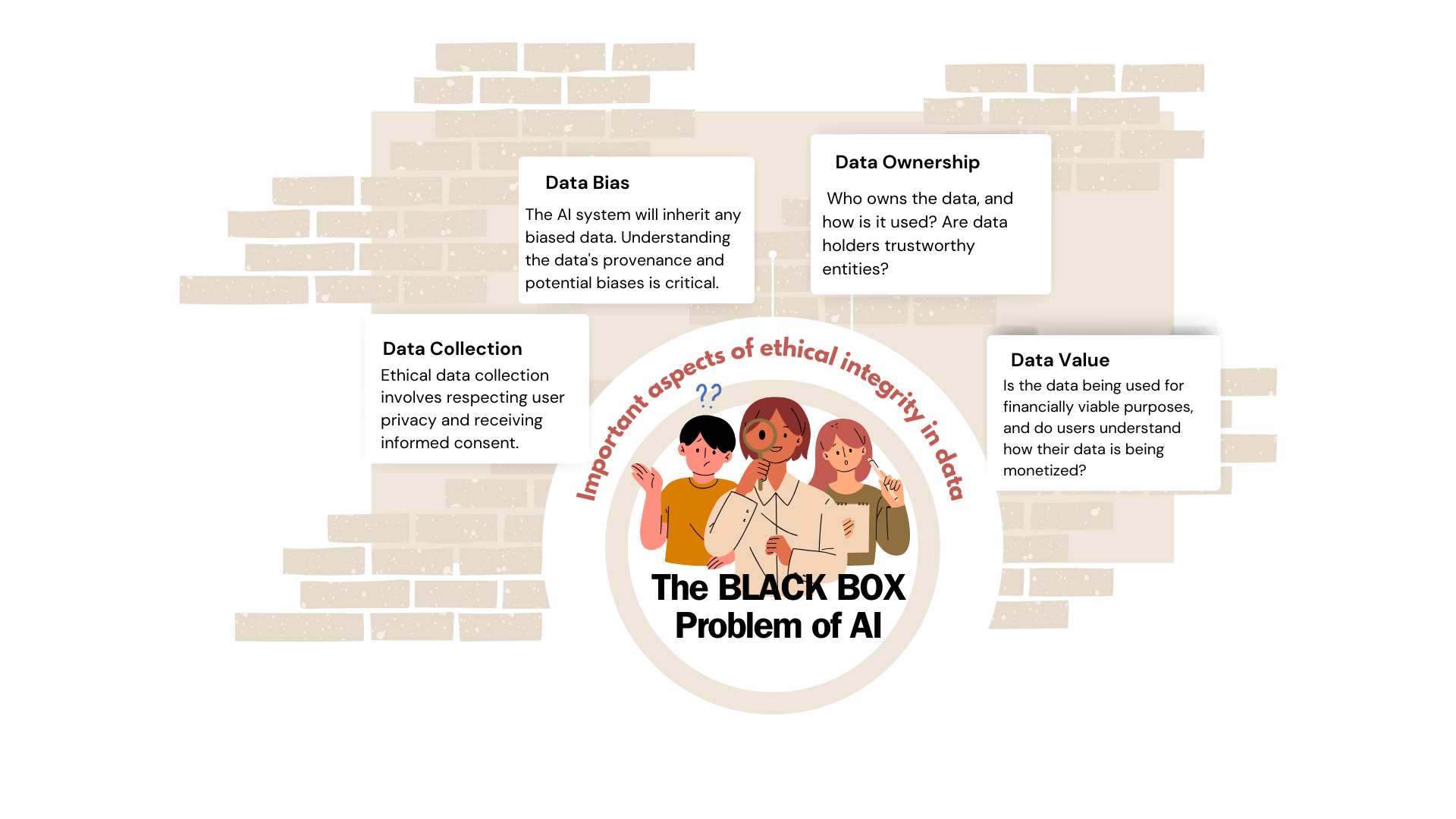

Important aspects of ethical integrity of data

AI systems are powered by data, and the quality of this data can have a major impact on outcomes. Organizations therefore need explainability around:

- Data Collection: Ethical data collection involves respecting user privacy and receiving informed consent.

- Data Bias: The AI system will inherit any bias present in its data. Understanding the data’s provenance and potential biases is critical.

- Data Ownership: Who owns the data, and how is it used? Are data holders trustworthy entities?

- Data Value: Is the data being used for financially viable purposes, and do users understand how their data is being monetized?
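As a rough illustration of the data bias point above, the sketch below compares outcome rates across groups in a small dataset before it is used for training. The records, group labels, and the `approval_rates` helper are all hypothetical, and a gap in rates is only a signal that the data deserves a closer look, not proof of bias on its own.

```python
from collections import defaultdict

# Hypothetical loan records as (group label, approved?) pairs, invented for illustration.
records = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]


def approval_rates(rows):
    """Return the share of approved records for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in rows:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


rates = approval_rates(records)

# A large gap between groups suggests the data should be examined before training.
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A check like this is deliberately simple so that non-specialists can follow it, which is itself part of making data practices explainable.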

Who are the stakeholders of Explainability and Interpretability?

- AI developers: To optimize and debug, they require in-depth knowledge of the model’s inner workings.

- Business Leaders: They must have a basic understanding of AI’s fundamental principles. This does not mean complex algorithms, but enough to make educated decisions about its deployment and to ensure that ethical data practices are implemented.

- End Users: Users should understand how the AI system they engage with uses their data. For example, consider how search history influences social media feeds.

We can demystify the black box in AI by adding explainability and interpretability to AI applications, making them fair, trustworthy, and responsible.

Do you see the black box problem in the AI systems that you use?